Purpose

Our Software Division creates and customizes projects that use different forms of neural data (EEG, MRI, and fNIRS), building software-based solutions to problems in neuroscience and expanding what we know about the mind.

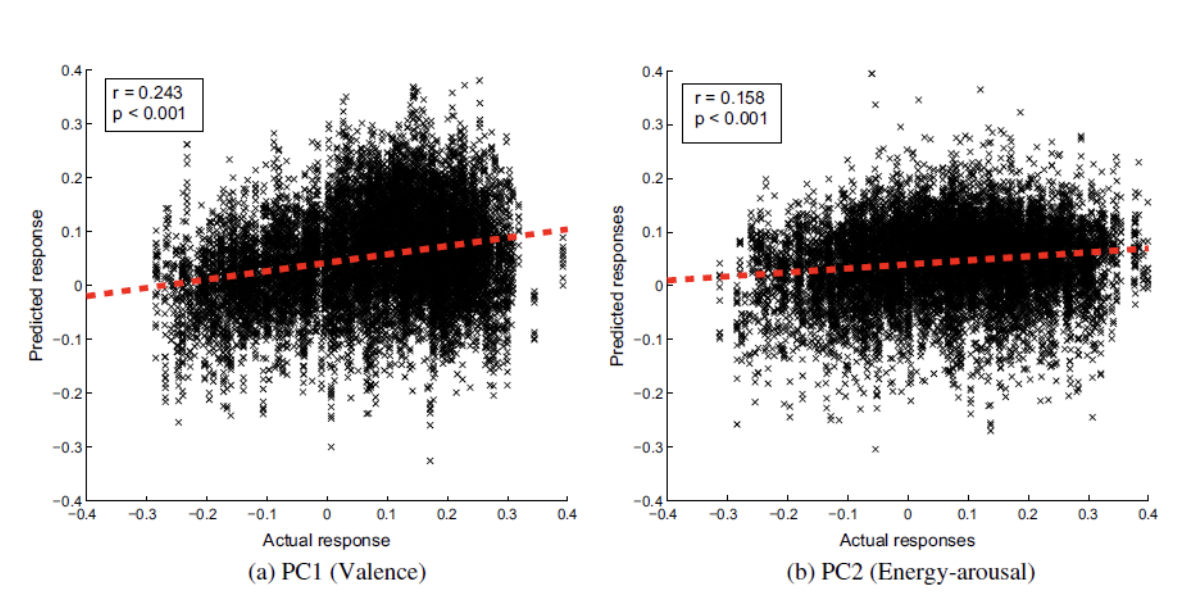

We currently have a wide variety of projects, including eye-tracking typing methods, music reconstruction from brain waves, and speech decoding.